Unit Testing BigQuery Queries Made Simple

Why Unit Test BigQuery Queries?

As data practitioners, ensuring your SQL queries are reliable is crucial. However, testing BigQuery queries can be challenging due to the nature of the data and the platform. But don’t worry! I’ll walk you through why it’s important and how you can do it effectively.

Common Challenges

Real Data is Unreliable: Using production or uncontrolled test data can lead to inconsistent results because data can change over time. Always use controlled, static data for testing.

Local Testing Isn’t Possible: BigQuery doesn’t support local execution of queries. Unlike PostgreSQL or MySQL, you can’t set up a local BigQuery server.

Cross-Database Testing is Impractical: BigQuery’s SQL dialect (GoogleSQL) includes unique functions and types (e.g., ARRAY, STRUCT) not supported by other SQL databases. Translating queries to another SQL dialect undermines the test’s validity.

Cost Concerns: Running queries on BigQuery can be expensive ($6 per TB scanned with ‘on-demand pricing’). Frequent testing, especially as part of CI/CD, can rack up costs quickly.

But don't worry! The solution in this article will allow you to run your BigQuery queries in your unit tests without spending a single penny 💰 !

Introducing bigquery-test-kit

To tackle these challenges, use bigquery-test-kit, a open-source python framework that simplifies unit testing of BigQuery queries by injecting test data directly into the query via a sub-query automatically generated by the lib.

Example Usage

Let’s dive into an example to see how it works.

import json

from google.cloud import bigquery

from bq_test_kit.bq_query_results import BQQueryResult

from bq_test_kit.bq_test_kit import BQTestKit

from bq_test_kit.bq_test_kit_config import BQTestKitConfig

from bq_test_kit.data_literal_transformers.json_data_literal_transformer import JsonDataLiteralTransformer

from bq_test_kit.interpolators.shell_interpolator import ShellInterpolator

# Your SQL query with placeholders

query = """

SELECT foobar, table_foo.foo, table_bar.bar

FROM ${TABLE_FOO} table_foo

INNER JOIN ${TABLE_BAR} table_bar USING (foobar)

WHERE foobar >= @min_foobar

"""

# Query parameters

query_parameters = [

bigquery.ScalarQueryParameter("min_foobar", "INT64", 0)

]

# Fake data for TABLE_FOO

table_foo_rows = [json.dumps({"foobar": 1, "foo": 1})]

table_foo_schema = [bigquery.SchemaField("foobar", "INT64"), bigquery.SchemaField("foo", "INT64")]

# Fake data for TABLE_BAR

table_bar_rows = [

json.dumps({"foobar": 1, "bar": 2}),

json.dumps({"foobar": 2, "bar": 5})

]

table_bar_schema = [bigquery.SchemaField("foobar", "INT64"), bigquery.SchemaField("bar", "INT64")]

# Initialize BigQuery client and BQTestKit

client = bigquery.Client("my_gcp_project")

bqtk_conf = BQTestKitConfig().with_test_context("basic")

bqtk = BQTestKit(bq_client=client, bqtk_config=bqtk_conf)

# Execute the query with fake data

results: BQQueryResult = bqtk.query_template(from_=query) \

.with_query_parameters(query_parameters) \

.with_datum({

"TABLE_FOO": (table_foo_rows, table_foo_schema),

"TABLE_BAR": (table_bar_rows, table_bar_schema),

}) \

.as_data_literals() \

.loaded_with(JsonDataLiteralTransformer()) \

.add_interpolator(ShellInterpolator()) \

.run()

# Access the results

for row in results:

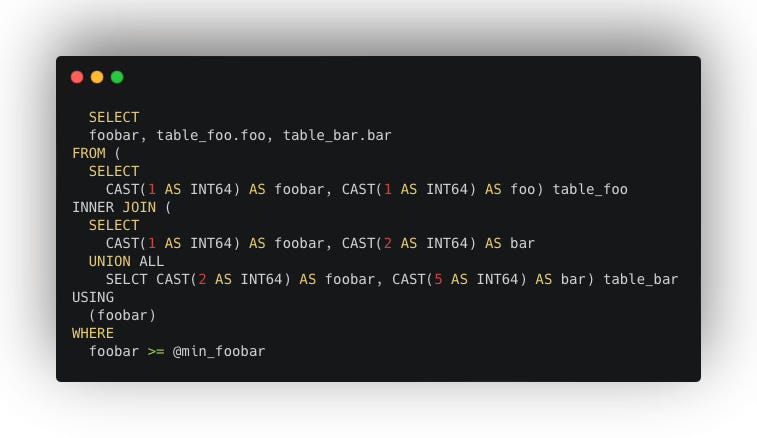

print(row)Output query to be execute in BQ will look like this:

Why This Works

bigquery-test-kit replaces placeholders with subqueries containing your fake data, running the query without actually touching any real tables. This approach ensures your tests are isolated, repeatable, and free!

Best Practices

Isolate Queries: Ensure your tests don’t depend on external data or services.

Use Service Accounts: Use a dedicated service accounts with limited permissions.

Define Retry Policies: Always set up retry policies to handle transient errors.

Conclusion

Unit testing BigQuery queries doesn’t have to be a headache. By using bigquery-test-kit, you can ensure your queries are accurate and reliable without incurring high costs. Happy testing and enjoy !

For more information, check out these resources:

Legacy SQL Vs GoogleSQL: https://cloud.google.com/bigquery/docs/reference/standard-sql/migrating-from-legacy-sql

Github repo: https://github.com/tiboun/python-bigquery-test-kit

Pypi package details: https://pypi.org/project/bq-test-kit

Bigquery pricing: https://cloud.google.com/bigquery/pricing

Thanks to Robin Monnier for inspiring this article.